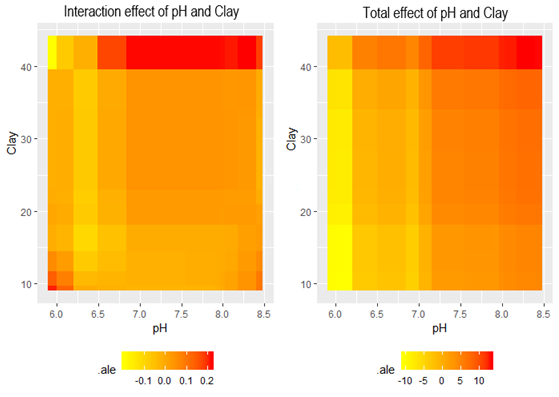

Explainable artificial intelligence for trial field analysis

A Supper & Supper Use Case

Other Use Cases in this category

Explainable artificial intelligence for trial field analysis: Read use case

Automated and user-friendly Data Analysis Pipeline for Agricultural Field Trials: Read use case

Crowd Control - Using AI to track people counts: Read use case