Industrial Anomaly Detection in Manufacturing

A Supper & Supper Use Case

Project Goal

The goal of this project was to detect anomalous behavior in machinery and other mechanical and industrial equipment without prior knowledge of what anomalous behavior looks like. Detecting anomalies early in manufacturing and production processes paves the way toward a more efficient future for industries and factories. In this project, we used state-of-the-art machine learning tools to detect anomalies in industrial processes precisely, so that such behavior can be identified early.

What are anomalies in manufacturing?

Anomalies in manufacturing are deviations of a manufacturing, industrial engineering, or other technical system's operation from its intended or normal behavior. Such deviations can degrade performance and lead to instabilities, security issues, defects, and even system failure. Given the intricate dynamics of these systems, pinpointing the causes of these anomalies can be challenging.
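To make "deviation from normal behavior" concrete, here is a minimal sketch of unsupervised anomaly detection. It assumes multichannel sensor readings (the temperature and vibration values are hypothetical) and assumes that a stretch of historical data reflects normal operation. It learns a per-sensor baseline (mean and standard deviation) and flags readings whose largest absolute z-score exceeds a threshold. The function names and the threshold of 3.0 are illustrative choices, not the method used in this project, which relied on more advanced machine learning models.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_baseline(normal_data: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Estimate per-sensor mean and standard deviation from readings assumed to be normal."""
    columns = list(zip(*normal_data))  # one tuple of values per sensor
    return [(statistics.fmean(col), statistics.pstdev(col)) for col in columns]


def anomaly_score(reading: Sequence[float], baseline: Sequence[Tuple[float, float]]) -> float:
    """Largest absolute z-score across sensors, i.e. how far a reading departs from normal."""
    score = 0.0
    for value, (mean, std) in zip(reading, baseline):
        std = std if std > 0 else 1e-9  # guard against sensors that were constant during training
        score = max(score, abs(value - mean) / std)
    return score


def detect(readings: Sequence[Sequence[float]],
           baseline: Sequence[Tuple[float, float]],
           threshold: float = 3.0) -> List[bool]:
    """Flag each reading whose anomaly score exceeds the (hypothetical) threshold."""
    return [anomaly_score(r, baseline) > threshold for r in readings]


# Hypothetical training data: [temperature in degrees C, vibration in mm/s] during normal operation.
normal = [[70.0, 0.50], [71.0, 0.52], [69.0, 0.48], [70.5, 0.51], [69.5, 0.49]]
baseline = fit_baseline(normal)

new_readings = [[70.2, 0.50], [70.0, 0.60], [75.0, 0.50]]
print(detect(new_readings, baseline))  # [False, True, True]
```

No labeled examples of failures are needed: the detector only learns what normal looks like and scores departures from it. A z-score baseline ignores correlations between sensors and temporal patterns, which is one reason practical systems use richer models, but the underlying principle stays the same.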

Other Use Cases in this category

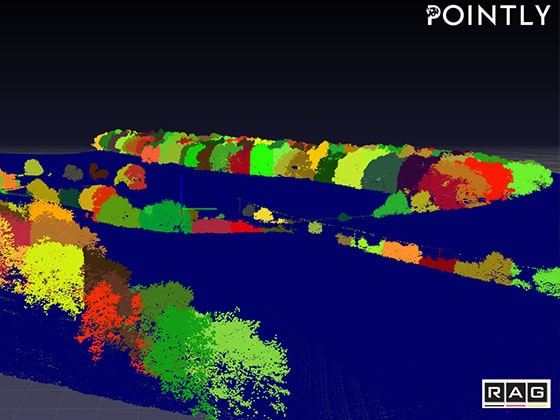

The automated generation of CAD models from 3D highway Use Case lesen

The automatic detection of different trucks in orthophotos with Use Case lesen

The automated conduction of a forest inventory Use Case lesen